How Common Core State Standards assessments will score student judgment has yet to be finalized. How student judgment can be scored depends on time and cost. There is little additional cost when scoring is integrated into classroom instruction (in person or by way of software) as formative assessment, with feedback arriving within an instant to one day. Weekly and biweekly classroom tests take additional time. Summative standardized tests take even more time. Common Core State Standards tests will be summative standardized tests. The selection of questions for all types of tests is subjective. The easiest type of test to score is the multiple-choice or selected response test. All other types of tests require subjective scoring as well as subjective selection of items for the test.

The multiple-choice test is the least expensive to score.

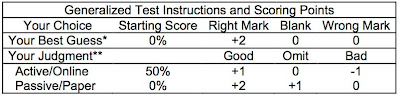

Traditional scoring, which counts only right marks, keeps student judgment from playing any part in the assessment. A simple change in the test instructions puts student judgment into the assessment, where judgment can carry the same weight as knowing and doing.

* Mark every question even if you must guess. Your judgment of what you know and can do (what is meaningful, useful, and empowering) has no value.

** Only mark to report what you trust you know or can do. Your judgment and what you know have equal value (an accurate, honest, and fair assessment).

Traditional right count scoring treats each student, each question, and each answer option with equal value. This simplifies the statistical manipulation of student marks. This is a common psychometric practice when you do not fully know what you are doing. It produces usable rankings based upon how groups of students perform on a test, which is something different from rankings based upon what individual students actually know or can do (what teachers and students need to know in the classroom).

This problem increases as the test score decreases. We have a fair idea of what a student knows with a test score of 75% (about 3/4 of the time a right mark is a right answer). At a test score of 50%, half of the right marks can be from luck on test day.

These two problems almost vanish when student judgment is included in the alternative multiple-choice assessment. Independent scores for knowledge and judgment (quantity and quality) indicate what a student knows and to what extent it can be trusted at every score level. This provides the same type of information as is traditionally associated with subjectively scored alternative assessments that all champion student judgment (short answer, essay, project, report, and folder).
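
One way to picture the two independent scores is sketched below in Python. The post does not give formulas at this point, so the definitions are assumptions: quantity is taken as right marks over all questions, and quality as right marks over marked questions (right plus wrong). The function name `quantity_and_quality` is hypothetical.

```python
def quantity_and_quality(right, wrong, omit):
    """Return (quantity, quality) as fractions between 0 and 1.

    Assumed definitions (not stated in the post):
      quantity = right marks / all questions on the test
      quality  = right marks / questions the student chose to mark
    """
    total = right + wrong + omit
    marked = right + wrong
    quantity = right / total if total else 0.0
    quality = right / marked if marked else 0.0
    return quantity, quality


# A student who guesses on every one of 40 questions.
guesser = quantity_and_quality(right=20, wrong=20, omit=0)

# A student who marks only what is trusted and omits the rest.
reporter = quantity_and_quality(right=20, wrong=2, omit=18)

print("guesser:", guesser)
print("reporter:", reporter)
```

Under these assumed definitions both students have the same quantity score, but the student who marked only 22 questions has a much higher quality score, which is the distinction a right count score alone cannot show.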

Multiple-choice tests can cover all levels of thinking. They can be done in relatively short periods of time. They can be specifically targeted. Folders can cover long time periods and provide an appropriate amount of time for each activity (as can class projects and reports).

Standardized test exercises run into trouble when answering a question is so involved and time is so limited that the announced purpose of demonstrating creativity and innovation cannot take place in a normal way. My own experience with creativity and innovation is that it takes several days to years. These types of assessments IMHO then become a form of IQ test when students are forced to perform in a few hours.

Quantity and quality scoring can be applied to alternative assessments by counting information bits. In general, an information bit is a simple sentence. It can also be a key relationship, sketch, diagram, or performance: any kernel of information or performance that makes sense. The scoring is as simple as when applied to multiple-choice.

Active scoring starts with one half of the value of the question (I generally used 10 points for essay questions, which produced a range of zero to 20 points for an exercise taking about 10 minutes). Then add one point for each acceptable information bit. Subtract one point for each unacceptable information bit. Fluff, filler, and snow count zero.
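
The arithmetic above can be sketched in Python. The names `BitCounts` and `active_score` are hypothetical, and clamping the result to the range of zero to the full question value is an assumption drawn from the stated range of zero to 20 points.

```python
from dataclasses import dataclass


@dataclass
class BitCounts:
    """Scorer's tally of information bits in one essay answer."""
    acceptable: int
    unacceptable: int
    fluff: int = 0  # fluff, filler, and snow: tallied but worth zero


def active_score(counts, question_value=20):
    """Start at half the question value, +1 per acceptable bit, -1 per unacceptable bit."""
    start = question_value // 2
    raw = start + counts.acceptable - counts.unacceptable
    # Assumption: scores are held within 0..question_value.
    return max(0, min(question_value, raw))


# Six acceptable bits, two unacceptable bits, three pieces of fluff.
print(active_score(BitCounts(acceptable=6, unacceptable=2, fluff=3)))  # 14

# An answer made only of fluff stays at the starting score.
print(active_score(BitCounts(acceptable=0, unacceptable=0, fluff=5)))  # 10
```

A blank answer and an all-fluff answer both stay at the starting 10 points, so a student only moves off the midpoint by reporting something the scorer can accept or reject.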

Quantity and quality scoring and rubrics merge when the acceptable information bits and the rubric items become synonymous. Rubrics can place preconceived limits (unknown to the student) on what is to be counted. With both methods, possible responses that are not made count as zero. Responses that are made but are not included in the rubric are not counted under a rubric, but are counted under quantity and quality scoring. In this way quantity and quality scoring is more responsive to creativity and innovation. The downside of quantity and quality scoring, when applied to alternative assessments (rather than to multiple-choice), is that it involves the same subjective judgment as a scorer working with rubrics.

Standardized multiple-choice tests have been overmarketed for years. The first generation of alternative and authentic tests also failed. This gave rise to folders and the return of right mark scored multiple-choice. The current generation of Common Core State Standards alternative tests appears to again be overmarketed.

We want to capture in numbers what students know and can do and their ability to make use of that knowledge and skill. Learning and reporting on any good classroom assignment is an authentic academic learning exercise. The idea that only what goes on outside the classroom is authentic is IMHO a very misguided concept. It directs attention away from the very problems created by an emphasis on teaching rather than on meeting each student’s need to catch up, to succeed each day, and to contribute to the class.

The idea that only a standardized test can provide needed direction for instruction is also a misguided concept. It belittles teachers. It is currently impossible for a standardized test to perform as marketed unless it is carried out online. Feedback must arrive within the critical time in which positive reinforcement is achieved. At lower levels of thinking that feedback must come in seconds. At higher levels of thinking, with high quality students, feedback that takes up to several days can still be effective.

Common Core State Standards assessments must include student judgment. They must meet the requirements imposed by student development. Multiple-choice (that is not forced choice, but really is multiple-choice, such as the partial credit Rasch model IRT and Knowledge and Judgment Scoring) and all the other alternative assessments include student judgment.

All students are familiar with multiple-choice scoring (count right, wrong, and omit marks). Few students are aware of the rubrics created to improve the reliability of subjectively scored tests. This again leaves the multiple-choice test as the fairest form of quick and directed assessment when students can exercise their judgment in selecting questions to report what they trust they actually know and can do.

For me, this form of multiple-choice testing gave a better sense of what the class and each student knew and could do (and as importantly, did not know and could not do) than reading 100 essay tests. Knowledge and Judgment Scoring does a superior job of highlighting misconceptions and grouping students by specific learning problems in classes of over 20 students.
