7

We have now gone from the real world of counting and test scores through three stages (average, standard deviation and test reliability) of calculating and relating averages. The next step is to return, as best these abstract statistics can, to the real world. The problem is that they only see, or represent, the real world as portions of the normal curve of error (Standard error and Standard error vs. Standard error of measurement). They no longer see individual test scores.

If a student could take the same test several times, the scores would form a distribution with a mean and standard deviation (SD). That mean would be a best estimate of the student’s true score on that test. The SD would indicate the range of expected measurements. Some 2/3 of the time the next test score is expected to fall within one SD of the mean.
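The "2/3 of the time" figure can be checked with a few lines of Python. This is a minimal sketch that assumes the repeated scores follow a normal distribution; the function name `prob_within` is made up for this illustration.

```python
import math

def prob_within(k_sd: float) -> float:
    """Probability that a normally distributed score lands within k_sd SDs of its mean."""
    # For a normal distribution, P(|X - mean| <= k * SD) = erf(k / sqrt(2)).
    return math.erf(k_sd / math.sqrt(2))

# Share of repeated scores expected within one SD of the mean (assumes normality).
print(f"{100 * prob_within(1.0):.1f}% of scores fall within one SD")
```

The result is close to 68%, which is the "some 2/3" quoted above.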

[This makes the same sense as a person going to a baseball game to watch a batter who has averaged a hit 50% of the time in his last 20 games. That is a descriptive use of the statistic (0.500). If the person bets the batter will do the same in the current game, that is a predictive use of the same statistic (0.500). “Past performance is no guarantee of future performance.” The SD gives us a “ballpark” idea of what may happen. The following statistic promises a better idea.]

The standard error of measurement (SEM), statistic five in this series, estimates, on average, a student score range centered on the class mean. It is not the specific location of the next expected test score. It is not a range tailored to each student. It is tailored to the average student in the class.

The accuracy and precision of this estimate are important. The range of the SEM sets the limit on how much of an increase in test score is needed, from one year to the next, to be significant. The smaller the range, the finer the resolution.

Students, of course, do not retake the same test many times to generate the needed scores for averaging. The best the psychometricians can do is to estimate the SEM using the SD of student scores (2.07) and the test reliability (0.29) in Table 10.


SEM = SQRT(MSrow) * SQRT(1 – KR20)

SEM = SQRT(4.28)*SQRT(1 – 0.29)

SEM = 2.07 * SQRT(0.71)

SEM = 2.07 * 0.84 = 1.75 or 8.31%

A portion of the student score variance, averaged over N – 1 (MSrow of 4.28 in the far right column of Table 10, equivalent to an SD of 2.07), is used to estimate the SEM. The portion is determined by the test reliability, KR20 (0.29). The SEM for the Nursing124 data (1.75) can also be expressed as 8.31% (Table 10).
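The same arithmetic can be sketched in Python. The function and variable names are made up for this illustration; the inputs are the rounded values quoted above (MSrow 4.28, KR20 0.29, 21 items), so the last digit can differ slightly from the spreadsheet, which works from unrounded cells.

```python
import math

def sem_from_variance(ms_row: float, kr20: float) -> float:
    """SEM = SQRT(MSrow) * SQRT(1 - KR20), as in the equations above."""
    sd = math.sqrt(ms_row)              # student score SD in counts
    return sd * math.sqrt(1.0 - kr20)   # keep only the unreliable portion

MS_ROW = 4.28    # student score variance (N - 1) from Table 10
KR20 = 0.29      # test reliability from Table 10
N_ITEMS = 21     # items on the test, used to convert counts to percent

sem = sem_from_variance(MS_ROW, KR20)
sem_pct = 100 * sem / N_ITEMS
print(f"SEM = {sem:.2f} counts, or {sem_pct:.2f}% of {N_ITEMS} items")
```

With a reliability of 0 the SEM equals the SD; with a reliability of 1 it shrinks to zero.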

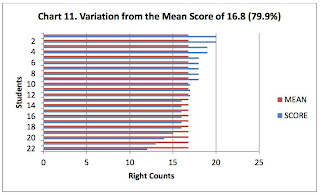

The SD for the student score mean (0.799 * 100 = 79.87%) was 9.85% (2.07/21). With a test reliability of only 0.29, the SEM is little better (smaller) than the score SD (SD 9.85% and SEM 8.31%).

Charts 15 and 16 show what the above actually looks like in the normal world. Chart 15 is for a set of data (Nursing124) that is just below the boundary of the 5% level of significance by the ANOVA F test. The F test was 1.31 and the critical value was 1.62. The SEM curve (1.75 or 8.31%) is close to the normal SD of the average test score (2.07 or 9.85%). The SEM is only a 15.63% reduction from the SD.


Chart 16 is for a set of data (Cantrell) that is well above the boundary of the 5% level of significance by the ANOVA F test. The F test was 3.28 with a critical value of 2.04. The SEM curve (1.21 or 8.68%) is much narrower than the normal SD of the average test score (2.55 or 18.19%). The SEM is a 52.55% reduction from the SD.
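The two reductions can be compared side by side with a short Python sketch. It uses the SD and SEM values in counts quoted for Charts 15 and 16; the function names are made up for this illustration. Because SEM = SD * SQRT(1 – KR20), the ratio SEM/SD also implies a reliability, which the sketch backs out as a rough check. Working from rounded counts, the Nursing124 reduction comes out a little under the 15.63% obtained from the percent values.

```python
def reduction_pct(sd: float, sem: float) -> float:
    """Percent by which the SEM is narrower than the SD."""
    return 100 * (1 - sem / sd)

def implied_reliability(sd: float, sem: float) -> float:
    """Reliability implied by SEM = SD * sqrt(1 - reliability)."""
    return 1 - (sem / sd) ** 2

# (SD, SEM) in counts, as quoted for Charts 15 and 16.
datasets = {"Nursing124": (2.07, 1.75), "Cantrell": (2.55, 1.21)}

for name, (sd, sem) in datasets.items():
    print(f"{name}: reduction {reduction_pct(sd, sem):.2f}%, "
          f"implied reliability {implied_reliability(sd, sem):.2f}")
```

The higher the implied reliability, the larger the reduction from SD to SEM.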

This makes sense. It follows that the higher the test reliability, the lower (the shorter the range of) the SEM on a normal scale. Do these statistics really mean this? Most psychometricians believe they do.

[Descriptive statistics are used in the classroom on each test. Rarely are specific predictions made. Standardized tests are marketed by their test reliability and SEM. This is the same change IMHO as changing from amateur to professional in sports. It is no longer about how you play the game and having fun, but about winning. Every possible observation is subject to examination.]

I again made use of the process of deleting and restoring one item at a time to take a peek at how these statistics interact. [SEMEngine, Table 10, is hosted free at http://www.nine-patch.com/download/SEMEngine.xlsm and .xls.]


The SEM (red) is a much more stable statistic than the test reliability (blue dot) across a range of student scores from 55% to 95% (Chart 17). Two scales are involved: a ratio scale of 0 to 1 and a normal scale of right counts. The lowest trace (a ratio) on Chart 17 is inverted (second trace) and then multiplied by the top trace (SDrow in counts) to yield the SEM in counts.

Even more striking than the stability of the SEM (red) are the parallel traces of the student score standard deviation (SDrow, green dot) and the test reliability (KR20, blue dot). This makes sense. When the student scores spread out, the Variance (MSrow) also increases, which increases the test reliability (KR20). I was surprised to see the two so tightly related.

Chart 17 also includes the SQRT(1-KR20) (blue triangle). This inverts the KR20 (blue dot). The stable SEM (red) then results from multiplying this inverted value by the student score SDrow (green dot). This makes sense. Multiplying a number by its reciprocal yields one; in this case, a two-step process multiplies two closely related numbers, so the product stays nearly constant.
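One way to see why the product stays so steady is to write out the standard KR-20 formula, KR20 = k/(k – 1) * (1 – Σpq / score variance), and let the score variance change. The sketch below is a simplification, not the SEMEngine calculation: it assumes the sum of item variances (Σpq) stays fixed at a hypothetical 3.10, chosen so that the Nursing124 variance of 4.28 gives a KR20 near 0.29. In the spreadsheet, deleting an item changes that sum as well.

```python
import math

N_ITEMS = 21     # items on the test
SUM_PQ = 3.10    # hypothetical fixed sum of item variances (an assumption)

def kr20(score_var: float, sum_pq: float = SUM_PQ, k: int = N_ITEMS) -> float:
    """Standard KR-20 reliability from the score variance and item variances."""
    return (k / (k - 1)) * (1 - sum_pq / score_var)

def sem(score_var: float, sum_pq: float = SUM_PQ, k: int = N_ITEMS) -> float:
    """SEM = SD * sqrt(1 - KR20)."""
    return math.sqrt(score_var) * math.sqrt(1 - kr20(score_var, sum_pq, k))

# Spread the student scores out and watch SD and KR20 rise together
# while the SEM barely moves.
for var in (3.5, 4.28, 5.0, 6.0):
    print(f"variance {var:4.2f}  SD {math.sqrt(var):.2f}  "
          f"KR20 {kr20(var):.2f}  SEM {sem(var):.2f}")
```

Under this assumption the SD and KR20 climb together, SQRT(1 – KR20) falls, and the two changes largely cancel in the SEM.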

[In designing the forerunners of PUP, I discarded stable statistics as IMHO they seemed to be of little descriptive value in the classroom. That is not true for standardized tests where the goal is to use the shortest test possible composed of discriminating items (no mastery or unfinished items).]

The SEM engine now contains the first five of the six statistics commonly used in education. In the next post I will explore the relationship between the SEM of individual student scores and the SE of the mean of the class score, the average test score. These have little meaning in the classroom but are IMHO very important in understanding standardized testing.

[To use the Test Reliability and Standard Error of Measurement Engine for combinations other than a 22 by 21 table, adjust the central cell field and the values of N for student and item. Then drag active cells over any new similar cells when you enlarge the cell field. You may need to do additional tweaking. The percent Student Score Mean and Item Difficulty Mean must be identical.

To reduce the cell field, use “Clear Contents” on the excess columns and rows on the right and lower sides of the cell field. Include the six cells that calculate SS that are below items and to the right of student scores. Then manually reset the number of students and items. You may need additional tweaking. The percent Student Score Mean and Item Difficulty Mean must be identical.]
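The check that the two percent means are identical can be illustrated outside the spreadsheet. This is a minimal Python sketch using a small made-up table of right (1) and wrong (0) marks, not the Nursing124 data; the variable names are hypothetical.

```python
import math

# Hypothetical marks: one row per student, one column per item (1 = right).
marks = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
]

n_students = len(marks)
n_items = len(marks[0])

# Percent score for each student (row) and percent difficulty for each item (column).
student_pct = [100 * sum(row) / n_items for row in marks]
item_pct = [100 * sum(col) / n_students for col in zip(*marks)]

student_mean = sum(student_pct) / n_students
item_mean = sum(item_pct) / n_items

# Both means count the same right marks over the same cell field,
# so they must agree when the table is set up consistently.
print(f"Student Score Mean {student_mean:.2f}%, Item Difficulty Mean {item_mean:.2f}%")
print("identical" if math.isclose(student_mean, item_mean) else "mismatch: check N and the cell field")
```

A mismatch after resizing the cell field usually means a row, a column, or one of the N values was left out of the adjustment.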

A password can be used to prevent unwanted changes in the SEMEngine.
